Preparing Compute Nodes to Use SimpliVity DVP

With the efficiency that SimpliVity’s Data Virtualization Platform (DVP) brings to data through accelerated inline deduplication, compression, and optimization, SimpliVity customers often need to scale compute before they need to scale storage. As long as the SimpliVity environment can provide the required storage capacity and performance, CPU and memory resources can be scaled out using what we call compute nodes. A compute node is simply an x86 server on VMware’s HCL that supports running ESXi. Compute nodes provide additional CPU and memory resources to the environment while consuming the SimpliVity DVP for storage. Using compute nodes is a native capability of the SimpliVity DVP and does not require any additional SimpliVity licensing.

Here is a conceptual diagram of a SimpliVity Datacenter leveraging a couple of HP DL360 Gen9 servers as compute nodes:

Another common deployment we see is with customers who have made an investment in blade servers, such as a Cisco UCS B-Series environment. The blades can also be used as compute nodes, with the SimpliVity DVP providing storage. For a UCS B-Series environment, this typically looks something like this:

In both of these scenarios the SimpliVity datastores are presented to the compute nodes as NFS datastores.

Once the SimpliVity datastores are presented to the compute nodes, any workloads running on the SimpliVity datastores will receive all the features and advantages provided by the SimpliVity DVP. All data will be deduplicated, compressed, and optimized at inception. This means features such as SimpliVity Rapid Clone, SimpliVity Backup, and SimpliVity Restore will be available for the workloads even though they are not running directly on the SimpliVity nodes.

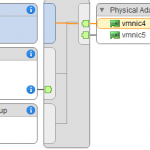

There are a couple of requirements for leveraging compute nodes. First, the compute nodes need to be connected to the SimpliVity storage network using 10GbE. Second, ESXi running on the compute nodes needs to be configured with the correct advanced settings for TCP/IP, NFS, and RPC. The values for these settings depend on the version of ESXi running on the compute nodes.

I have put together a PowerCLI script which will check the advanced settings required for the compute nodes and set them. The script can be found here: https://github.com/herseyc/SVT/blob/master/check-svtnfs.ps1

In the script there are a number of variables whose values depend on the version of ESXi running on the compute nodes:

$SVTnettcpipheapmax = 1536 #ESXi 5.1 use 128, 5.5 use 512, 6.0 use 1536
$SVTnettcpipheapsize = 32
$SVTnfsmaxvolumes = 256
$SVTsunrpcmaxconnperip = 128

The above settings are for ESXi 6.x. If you are running the script against earlier versions of ESXi, update these variables to reflect those settings. The required settings for other supported versions of ESXi can be found in the SimpliVity Administrator Guide.
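For hosts where PowerCLI is not at hand, the same values could in principle be applied from the ESXi shell. The sketch below is not taken from check-svtnfs.ps1. It assumes the four variables correspond to the advanced options /Net/TcpipHeapMax, /Net/TcpipHeapSize, /NFS/MaxVolumes, and /SunRPC/MaxConnPerIP (inferred from the variable names), and the function names are hypothetical. It only prints the esxcli commands so they can be reviewed before anything is changed. Only the TcpipHeapMax value is listed per version in the script comment; the other three are the ESXi 6.x values and should be verified against the SimpliVity Administrator Guide for earlier versions.

```shell
#!/bin/sh
# Hypothetical helper: map an ESXi version to the TcpipHeapMax value
# listed in the script comment (5.1 -> 128, 5.5 -> 512, 6.x -> 1536).
svt_heapmax_for() {
    case "$1" in
        5.1*) echo 128 ;;
        5.5*) echo 512 ;;
        6.*)  echo 1536 ;;
        *)    echo "unsupported ESXi version: $1" >&2; return 1 ;;
    esac
}

# Print (do not execute) the esxcli commands for a given ESXi version.
# The option paths are an assumption based on the script's variable names.
svt_print_settings() {
    heapmax=$(svt_heapmax_for "$1") || return 1
    echo "esxcli system settings advanced set -o /Net/TcpipHeapMax -i $heapmax"
    # The three values below are the ESXi 6.x values; verify them for 5.x.
    echo "esxcli system settings advanced set -o /Net/TcpipHeapSize -i 32"
    echo "esxcli system settings advanced set -o /NFS/MaxVolumes -i 256"
    echo "esxcli system settings advanced set -o /SunRPC/MaxConnPerIP -i 128"
}

svt_print_settings 6.0
```

Printing rather than executing keeps the sketch safe to run anywhere; the printed lines can be pasted into an SSH session on the host once they have been checked.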

To run the script, simply pass the host’s vCenter inventory name on the command line. The -CheckOnly option checks the settings but does not change anything.

check-svtnfs.ps1 ESXi1 -CheckOnly

Run the script without the -CheckOnly parameter to change the advanced settings.

check-svtnfs.ps1 ESXi1

Here is an example after running check-svtnfs.ps1 against an ESXi host with the -CheckOnly parameter, then running it to make the changes, and finally running it with the -CheckOnly option to validate:

The changes can be made without placing the host in maintenance mode, but they will not take effect until after the host is rebooted.

Once the Advanced Settings have been configured, edit the /etc/hosts file on each ESXi host. Add an entry for omni.cube.io which points to the IP Address of an OmniCube Virtual Controller (OVC). Details on completing this can be found in the OmniStack Administration Guide.
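As a rough illustration of the hosts file step, the sketch below appends an omni.cube.io entry only if one is not already present. The function name is hypothetical, and 192.0.2.10 in the usage note is a placeholder documentation address rather than a real OVC IP. The documented procedure in the OmniStack Administration Guide remains the reference.

```shell
#!/bin/sh
# Hypothetical helper: add "<OVC IP> omni.cube.io" to a hosts file unless
# an entry for that name already exists.
# Arguments: OVC IP address, hosts file path (defaults to /etc/hosts).
svt_add_hosts_entry() {
    ovc_ip=$1
    hosts_file=${2:-/etc/hosts}
    # -q keeps grep quiet; the dots are escaped so they match literally.
    if grep -q 'omni\.cube\.io' "$hosts_file" 2>/dev/null; then
        echo "omni.cube.io already present in $hosts_file"
    else
        printf '%s\tomni.cube.io\n' "$ovc_ip" >> "$hosts_file"
        echo "added omni.cube.io -> $ovc_ip"
    fi
}
```

To try it without touching the live file, copy /etc/hosts to a scratch location and run svt_add_hosts_entry 192.0.2.10 against the copy. Running it twice leaves a single entry.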

The SimpliVity VAAI NFS Plugin is then installed on the compute node. The process depends on the version of ESXi and the SimpliVity DVP, and details for the installation can be found in the SimpliVity Administrator Guide. The VIB (vSphere Installation Bundle) for the plugin is included on the OmniCube Virtual Controller and is installed using esxcli. In the command below, n is the ESXi major version (5 or 6) and o.o.o.o is the plugin/OmniStack version (for example, 3.0.8.5 for the latest).

esxcli software vib install --viburl=http://managementipaddressofovc/vaai/simplivity-esx-n.x-nas-plugin-o.o.o.o.vib
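To make the placeholders in that URL concrete, here is a small sketch that assembles it from its parts. The function name and the 192.0.2.20 address are illustrative assumptions; the path and file name pattern are the ones shown in the command above.

```shell
#!/bin/sh
# Hypothetical helper: build the VIB URL from the OVC management IP,
# the ESXi major version (5 or 6), and the plugin/OmniStack version.
svt_vib_url() {
    ovc_mgmt_ip=$1
    esx_major=$2
    plugin_version=$3
    echo "http://${ovc_mgmt_ip}/vaai/simplivity-esx-${esx_major}.x-nas-plugin-${plugin_version}.vib"
}

# Print the install command for review instead of running it.
echo "esxcli software vib install --viburl=$(svt_vib_url 192.0.2.20 6 3.0.8.5)"
```

After the install, esxcli software vib list can be used on the host to confirm that the plugin appears in the list of installed VIBs.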

Once the compute nodes have been properly prepared, the SimpliVity datastores are presented to them using the vSphere Client. In the Web Client this is done using the Manage Standard ESXi Hosts dialog as shown below:

The compute nodes can now consume the SimpliVity DVP and provide additional CPU and memory resources to the datacenter.

Do you still need to edit the local hosts file on ESXi for failover?

Dwayne,

Thanks for stopping by. Yes you do need to add the OVC storage address to the hosts file on the ESXi host. This is well documented in our deployment/administration documentation. Once configured it does not have to be changed to support failover.

Thanks

Hersey

Hi there and thanks for the article!

Sorry for the article necromancy, but what’s the failover strategy in this case? Is the NFS share exported from one OVC only? What happens if that OVC goes down for example?

If an OVC fails, the storage IP used to mount the NFS datastore will fail over to a surviving OVC. The NFS datastore will remain accessible to the compute nodes.

Thanks for stopping by.

Hersey

Thanks for that – OK wasn’t sure of the mechanism but now I’ve discovered internally this is done by another OVC doing an ARP claim for the IP of the OVC that fell over – as long as omni.cube.io is used on the access node hosts file at least.

Any idea how to get compute nodes in another cluster to access SimpliVity’s NFS storage?

Documentation says they have to be in the same Cluster.

The latest version of SimpliVity software (3.6.x) allows a SimpliVity datastore to be presented to compute nodes that are in the same datacenter but in a different cluster.

Thanks

Hersey

You can also have support export the datastore via the command line to whatever host you like, even one that is not in the same vCenter, as long as the target host can reach the MGMT or storage network of the sharing OVC. This is really only for temporary use, such as a migration, and not for production.

I found the solution and verified it:

It does not work through the GUI, but it can still be done from the CLI with “svt-datastore-share”.

Very good post!

Thanks

Hersey

What about the compute node specifications? Should they match the SimpliVity hosts, or can you go a generation or two back? I don’t want the nodes to drag down the system as far as performance goes.

I’m so glad I stumbled across your website!

For the link between the compute node and SimpliVity, does the compute node need to be configured with a VMkernel port or anything?

Yes, the compute node must be able to reach the OVC address “omni.cube.io” from its management or storage IP address. You can confirm this by running vmkping against “omni.cube.io” from the host after changing the /etc/hosts file. That is why a VMkernel port is needed, either a new one or an existing one.