SimpliVity Virtual Networking

Configuring virtual networking in a SimpliVity environment is simple and flexible. There are a few connectivity requirements to support the SimpliVity Data Virtualization Platform (DVP), but beyond those, the virtual and physical networking is configured as it would be in any vSphere environment.

This post provides an overview of the virtual network requirements for the SimpliVity DVP, along with details on the different physical connectivity options for management and virtual machine traffic.

The SimpliVity appliance is built on the HPE DL380 Gen10 (which I will also refer to as a SimpliVity node or host). By default, the appliance contains two LOM network cards: a 4-port 1 GbE and a 2-port 10 GbE. The 10 GbE card is available with either SFP+ or 10GBASE-T ports. Up to 3 additional network cards can be added to an appliance for more network connectivity. ESXi runs on the SimpliVity appliance, and all network configuration is done through the vCenter Server managing the SimpliVity hosts.

Here is a list of guidelines, requirements, and best practices for SimpliVity DVP networking:

- 10 GbE connectivity is required for SimpliVity DVP Storage traffic.

- The network supporting SimpliVity DVP Traffic should be configured for Jumbo Frames.

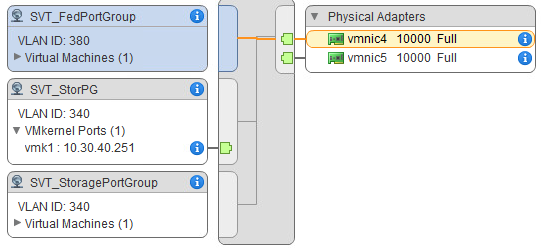

- For the portgroups associated with SimpliVity DVP Storage Traffic, and for the ESXi vmkernel associated with the storage network, the network adapters should be configured Active/Standby.

- The OVC Storage IP Address and the ESXi Storage vmkernel IP Address are required to be on the same subnet across all nodes in the cluster.

- The OVC Federation IP Address is required to be on the same subnet across all nodes in the cluster.

- SimpliVity DVP Storage, SimpliVity Federation, Management, and VM Traffic should be logically separated.

- Network configurations should be consistent across all SimpliVity hosts in the same cluster.

- vSphere Standard vSwitches and vSphere Distributed vSwitches are supported.

- SDN (NSX, for example) is supported for VM and management traffic.

Notice the difference between what is required and what should be configured. The requirements are just that: they are required for the SimpliVity DVP to work properly. The "should" items are optimal configurations, but they are not strictly required.
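As a rough illustration of the required vs. recommended split, the sketch below checks a hypothetical per-node configuration against the list above. The `NodeNetConfig` fields, the `check_cluster` function, and the example IP addresses and subnets are all assumptions made for illustration; "same subnet" is checked against a subnet you declare up front, and nothing here queries vCenter or any SimpliVity API.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class NodeNetConfig:
    """Hypothetical per-node snapshot of the settings the guidelines cover."""
    name: str
    ovc_storage_ip: str
    esxi_storage_vmk_ip: str
    ovc_federation_ip: str
    storage_mtu: int
    storage_link_gbps: int


def check_cluster(nodes: List[NodeNetConfig], storage_net: str,
                  federation_net: str) -> List[Tuple[str, str]]:
    """Return (level, message) findings; level is 'required' or 'recommended'."""
    storage = ipaddress.ip_network(storage_net)
    federation = ipaddress.ip_network(federation_net)
    findings: List[Tuple[str, str]] = []
    for n in nodes:
        # Required: OVC Storage and ESXi Storage vmkernel IPs share one subnet on every node.
        for label, ip in (("OVC storage", n.ovc_storage_ip),
                          ("ESXi storage vmk", n.esxi_storage_vmk_ip)):
            if ipaddress.ip_address(ip) not in storage:
                findings.append(("required", f"{n.name}: {label} IP {ip} not in {storage}"))
        # Required: OVC Federation IPs share one subnet on every node.
        if ipaddress.ip_address(n.ovc_federation_ip) not in federation:
            findings.append(("required",
                             f"{n.name}: federation IP {n.ovc_federation_ip} not in {federation}"))
        # Required: DVP storage traffic needs 10 GbE.
        if n.storage_link_gbps < 10:
            findings.append(("required", f"{n.name}: storage link is {n.storage_link_gbps} GbE"))
        # Recommended: jumbo frames on the DVP network.
        if n.storage_mtu < 9000:
            findings.append(("recommended", f"{n.name}: storage MTU is {n.storage_mtu}"))
    # Recommended: consistent configuration across hosts in the cluster.
    if len({n.storage_mtu for n in nodes}) > 1:
        findings.append(("recommended", "storage MTU differs between nodes"))
    return findings


if __name__ == "__main__":
    nodes = [
        NodeNetConfig("node1", "10.0.10.11", "10.0.10.21", "10.0.20.11", 9000, 10),
        NodeNetConfig("node2", "10.0.10.12", "10.0.10.22", "10.0.30.12", 1500, 10),
    ]
    for level, msg in check_cluster(nodes, "10.0.10.0/24", "10.0.20.0/24"):
        print(level, msg)
```

In practice the values would come from vCenter or the deployment records; the point is only that a "required" finding blocks a working DVP, while a "recommended" finding marks a less-than-optimal configuration.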

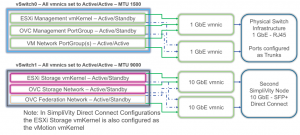

There are two options for the physical network connectivity of SimpliVity nodes to support DVP traffic: 10 GbE Direct Connect and 10 GbE Switched.

10 GbE Direct Connect

In a 2+ SimpliVity deployment, where a single vSphere cluster contains only 2 SimpliVity hosts, the 10 GbE ports can be connected directly to each other for DVP communication between the hosts (Storage, Federation). Direct Connect is supported with both the 10 GbE SFP+ and the 10GBASE-T adapters.

In this type of deployment, the vMotion vmkernel is typically placed on the vSwitch with the direct connects. By default, the Storage vmkernel is also enabled for vMotion, but a separate vmkernel can be created for vMotion, which gives you the flexibility to send this traffic over a different vmnic than the storage traffic.
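To illustrate why a separate vMotion vmkernel helps, here is a small model of an explicit failover order with one active and one standby uplink, in line with the Active/Standby guideline above. Giving storage and vMotion mirror-image failover orders is a common pattern but an assumption here, as are the vmnic names. `select_uplink` is a hypothetical, simplified stand-in for vSphere's "Use explicit failover order" behavior, not its actual implementation.

```python
from typing import Dict, List, Optional


def select_uplink(active: List[str], standby: List[str],
                  link_up: Dict[str, bool]) -> Optional[str]:
    """Simplified explicit failover: first healthy active uplink, else first healthy standby."""
    for nic in active + standby:
        if link_up.get(nic, False):
            return nic
    return None  # no healthy uplink left


# Hypothetical mirror-image teaming on the direct-connect vSwitch: (active, standby).
STORAGE_ORDER = (["vmnic4"], ["vmnic5"])
VMOTION_ORDER = (["vmnic5"], ["vmnic4"])

if __name__ == "__main__":
    both_up = {"vmnic4": True, "vmnic5": True}
    # With both links healthy, storage and vMotion use different vmnics.
    print(select_uplink(*STORAGE_ORDER, both_up), select_uplink(*VMOTION_ORDER, both_up))
    # If vmnic4 fails, storage fails over to its standby and both share vmnic5.
    one_down = {"vmnic4": False, "vmnic5": True}
    print(select_uplink(*STORAGE_ORDER, one_down), select_uplink(*VMOTION_ORDER, one_down))
```

The design choice being illustrated: in normal operation a large vMotion does not compete with storage traffic for the same 10 GbE link, yet each traffic type still has a standby path if a link fails.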

In a direct connect configuration, the 4 x 1 GbE ports are available for northbound traffic (VM, management, etc.). These can be configured to meet customer requirements for traffic separation (for example, separating management from VM traffic) or network capacity. Additional NICs can also be added to meet further network connectivity requirements.

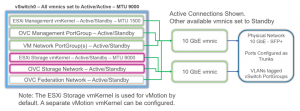

10 GbE Switched

In a vSphere cluster containing more than 2 SimpliVity hosts (or 2 SimpliVity hosts plus standard ESXi hosts consuming the DVP as compute nodes), the hosts must be connected to a switch via 10 GbE. In this type of deployment, all traffic can be “converged” on the available 10 GbE vmnics.
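The choice between the two options comes down to a simple rule, sketched below. The function name and its return values are hypothetical, and single-node clusters are deliberately left out because this post does not cover them.

```python
from typing import Tuple


def dvp_connectivity_options(simplivity_hosts: int, compute_nodes: int = 0) -> Tuple[str, ...]:
    """Return the 10 GbE connectivity options for DVP traffic in one vSphere cluster,
    following the two cases described in this post."""
    if simplivity_hosts < 2:
        # Single-node clusters are not covered in this post.
        raise ValueError("this sketch only covers clusters with 2 or more SimpliVity hosts")
    if simplivity_hosts == 2 and compute_nodes == 0:
        # 2-host cluster: hosts may be cabled back to back or go through a switch.
        return ("direct connect", "switched")
    # More than 2 SimpliVity hosts, or any compute nodes consuming the DVP.
    return ("switched",)


if __name__ == "__main__":
    print(dvp_connectivity_options(2))
    print(dvp_connectivity_options(2, compute_nodes=1))
    print(dvp_connectivity_options(4))
```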

How VM and management traffic are supported really depends on the customer's requirements. From a SimpliVity DVP standpoint, we do not care, as long as DVP storage traffic between hosts in the same vSphere cluster runs over 10 GbE (direct connect or switched), and the OVC management and ESXi management addresses can reach each other and can reach, and be reached from, the vCenter Server managing the vSphere cluster. Additional network adapters can be added to provide more network capacity, more network resiliency, and the ability to further separate network traffic as required.

SimpliVity is a scale-out architecture: as the environment grows, we add nodes (either SimpliVity hosts or compute nodes) to scale out resources. As a SimpliVity environment grows, it may become necessary to migrate from 10 GbE Direct Connect to 10 GbE Switched. This process is simple and can be completed without impact to the workloads in the environment. I covered the migration process in the post "SimpliVity Direct Connect to 10 GbE Switched Migration".

Question about deploying nodes with Deployment Manager for a SimpliVity deployment with 10 GbE switched, as in your second scenario: do you enter 1500 MTU for the management network and 9000 for storage and federation? The documentation is a little confusing; it says that when using all 10 GbE networking, the MTU should be 9000 for all three networks.

John,

Thanks for stopping by. More than likely, you will use 1500 for the management network and 9000 for the storage and federation networks. During deployment, the vSwitch will be configured for MTU 9000 and the management vmkernel will be set to 1500 (if that is what you entered in the wizard). This is a typical configuration. Remember that Jumbo Frames for storage and federation must be configured end-to-end.
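One common way to confirm Jumbo Frames end-to-end is to send a don't-fragment ping from the storage vmkernel with the largest payload the MTU allows. The sketch below only computes that payload (the MTU minus a 20-byte IPv4 header without options and an 8-byte ICMP header) and builds the `vmkping` command line; the vmkernel name `vmk1` and the target IP are placeholders, not values from a real deployment.

```python
IPV4_HEADER_BYTES = 20  # IPv4 header without options
ICMP_HEADER_BYTES = 8   # ICMP echo header


def max_ping_payload(mtu: int) -> int:
    """Largest ICMP payload that fits in one unfragmented IPv4 packet of this MTU."""
    return mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES


def vmkping_command(vmk: str, target_ip: str, mtu: int = 9000) -> str:
    """Build a vmkping line: -I picks the vmkernel interface, -d sets don't-fragment,
    -s sets the ICMP payload size."""
    return f"vmkping -I {vmk} -d -s {max_ping_payload(mtu)} {target_ip}"


if __name__ == "__main__":
    print(max_ping_payload(9000))  # 8972
    print(max_ping_payload(1500))  # 1472
    # Placeholder interface and address; substitute the storage vmkernel and a peer's storage IP.
    print(vmkping_command("vmk1", "10.0.10.22"))
```

If the 8972-byte ping fails while a default-size ping succeeds, some hop in the path is likely not configured for MTU 9000.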

Hersey

We are looking at a 2-node configuration for remote sites that don't have a 10 Gb switch. If we use DAC cables to connect the nodes, shouldn't we move the OVC Federation network to the 1 Gb NICs? Otherwise, it appears the replication traffic goes over management. Thank you for writing these articles.

– Paul

Paul,

The Federation network on the 10 GbE NICs is for Federation communication between the nodes; think of it as a heartbeat network between the two nodes. Replication between sites uses the management network. The management network is also used for Federation communication between the OVC and the Arbiter.

Hope that helps.

How should you configure LACP? Should you use it, or EtherChannel? How does link aggregation work with vSS and vDS?